Introduction

Background: MCMAS & ISPL

MCMAS - MCMAS1 is a Model Checker for Multi-Agent Systems. Like other model checkers, it explores the state space of system specifications to prove or disprove properties of those systems. Working with model checkers usually feels kind of like trying to code directly in set theory.. but MCMAS is unusual in that it can use Agents as a primitive, and supports using temporal epistemic logic to describe facts about knowledge and belief.

ISPL - Multi-Agent Systems are described in the Interpreted Systems Programming Language. ISPL is actually a specification language2, and besides primitives like Agents and Environments, it also supports Evolutions, Fairness, Invariants, Groups, Protocols, and States. Specifications in ISPL can make assertions about predicates and possible worlds. Available temporal logic operators are AG / EF / AX and epistemics operators include K / GK /GCK / DK.

Resources - See the upstream official page at SAIL3 or the unofficial mirror4 for engine source (C++). Check out the paper1 or the user manual5 (both pdf). ISPL examples that ship with the engine source are here6. See the local docs for a lighter, tutorial-style intro. For a gentle introduction to epistemic logic see this crash course. See these links for a growing collection of other background reading.

Introducing PY-MCMAS

py-mcmas is a python wrapper for the mcmas model-checking engine. It also has some support for parsing, analysis, and code-generation of the ISPL specification language, both via the CLI and via the API. Here's a high-level overview of some of the data-flow:

Main Features

Containerized Engine - Execute ISPL anywhere, from/to any of the formats above. Avoids platform-specific details for building and running the

mcmasbackend so that it won't become a dependency. 7

Pythonic Specifications - Write ISPL Specs directly in Python or use any combination of strings, lists, dicts, pydantic models, or SymPy Expressions in mixed-mode.

Supported Transforms - Convert JSON ↔ ISPL, Sympy Expressions ↔ Modal Logic, PythonDicts & PydanticModels ↔ ISPL

Analysis & Symbol Import/Export - Validate ISPL Programs or Fragments, Convert SymPy symbols ↔ ISPL Actions/States ↔ JSON. Export witnesses, counter-examples, & traces as JSON. Heuristics for analyzing complexity of logical expressions.

Structured Input - More pathways and datastructures opens up the possibility of driving the MCMAS engine without explicitly using ISPL. See the road map for more detailed discussion about how this might be used.

Structured Output - Because downstream processes might want to pass/fail model candidates, or act based on witnesses10, or want to avoid parsing text to figure out which model properties hold.

Model-Checking & Agentic AI

Perhaps you've only read this far because you're trying to figure out if this is related to agentic AI?

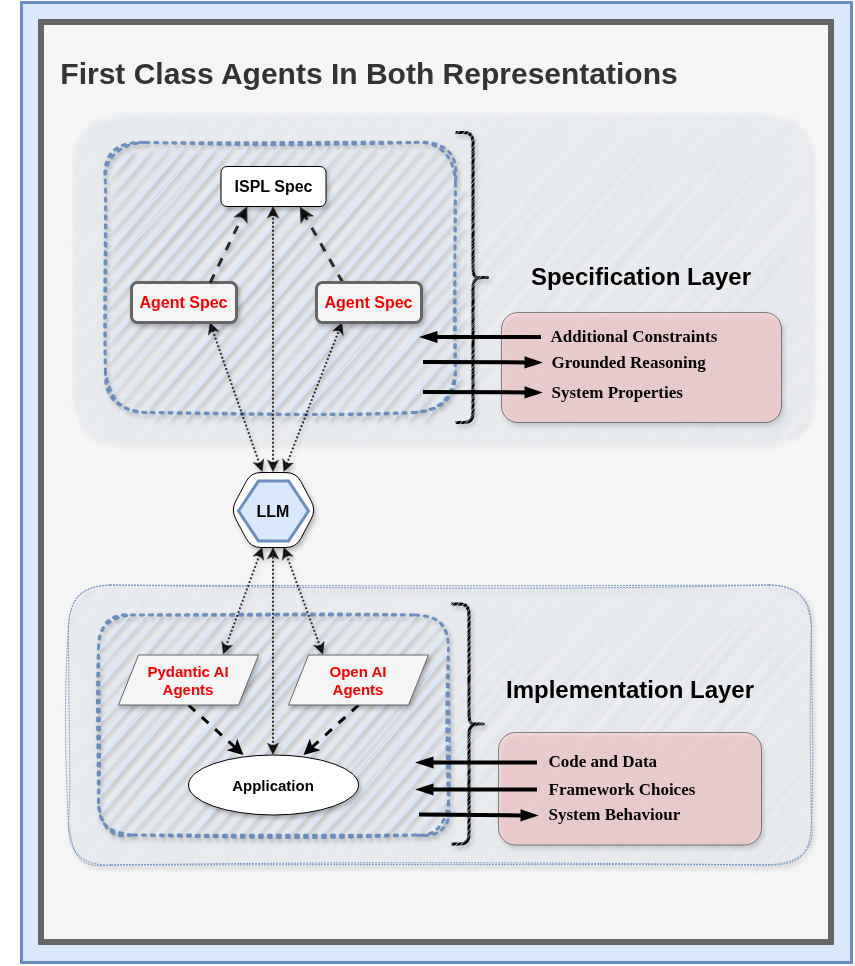

Actually py-mcmas does have experimental features along these lines. Those features are built on the stable core above, mostly by leveraging the pydantic data-model and adding in pydantic_ai. You can think of this part of py-mcmas as a proof of concept, or a living lab notebook.. stability is not guaranteed! Work in progress goes in a few directions, but you could say the main goal is to explore bridging the gap between implementation-layers and specification-layers using LLMs.

Read on in this section for the motivation/background, or jump directly to discussion of features.

Multiple Representations

Let's start with the impossible dream. Suppose you could wave a magic wand that works as a anything-to-anything converter. What would you do with it? Maybe whenenever you wanted to build stuff, you'd just pick your desired level of descriptive rigor, then convert losslessly between other faithful representations for free.

Opinions will differ as to whether this is an impossible dream or an imminent reality!

The right kind of specification layer and exactly how "formal" it should be is up for debate, but there's some growing feeling that "specs are the new code"22. The next sections try to nail down terminology and talks about Which Formalism? in more detail. But for now.. if it helps to fill in the black box, you can substitute "project test-Suite" for "formal model". Let's even assume that things like structured markdown and metadata from bug-trackers might also qualify, but that more structure is better.

No matter what specifications should look like, the actual workflow of tracking multiple representations already happens with coding agents and in model training.13 This goes to show that the specification layer is always there whether we're thinking about it or not. Friction between representations is still very real, but so is the progress14. Anyway, a few observations about this setup:

The Case for Formal Specifications

- Grounded reasoning and useful constraints have clear benefits and applications. There's general interest in things like fewer hallucinations, more interpretability, less magic & more determinism, as well as easy access to specialized validators & solvers.

- Code-generation from spec-to-implementation or from implementation-to-spec, raises other questions. Can we validate one side from the other? Can we work with both sides at the same time in a way that's mutually reinforcing, or for lack of a better term, "cogenerative" in interesting ways? To the extent that a successful abstraction is also a compression, can we use that to create extra room in context windows?

- Creating and updating explicit domain-models is a good idea. The best domain models are ones that can be weighed and measured, preferably ones that have some structure that we can exploit (e.g. syntax). Bonus points if you can propose, reject, and evolve those models.

- General problem-solving is also served here, and this isn't just about code-generation. The "implementation layer" can be regarded as optional or seen as answered by way of artifacts that fall out of the specification itself (like witnesses, evidence, or strategy invariants). Creating and updating structured domain models in the background also seems useful. As a kind of intermediate step in reasoning, or as an aid to research, what can we do with LLMs that are constrained to return only executable specifications in the form of model-checker inputs, or games, or simulations?

Specs are the New Code

Ok, but what is a spec? Let's back up and try to fill in blanks from the last section.

- The Classic Definition:

- Wikipedia15 emphasizes the "what, not how" aspect of specifications, and goes on to mention aspects such as whether descriptions are adequate, internally consistent, unambiguous, complete, satisfied, minimal. To evaluate a potential specification, it suggests considering criteria like: constructability, manageability, evolvability, usability, communicability, and the capacity for powerful & efficient analysis. The related article on formal methods24 starts off by mentioning "mathematically rigorous techniques".

Something like ISPL ranks pretty highly on the classic criteria:

Meanwhile, there's a tension here with what's happening on the ground23 22 in some sense, and how "informal" things like project test-suites, diagrams, structured markdown plans, and bug-tracker metadata fit in. What to make of that?

Partly it turns out that "mathematically rigorous" is actually a fairly low bar. For example: The main obstacle for wrapping metrics / state-machines / workflows around our informal systems is curating, converting, and querying all of the data involved. Thus even with a partially working "anything-to-anything" converters, all those things become easier.

Besides using LLMs to pull an informal system towards a formal model, you can also use LLMs to make a relaxed or incomplete formal specification useful.

As formal vs informal becomes less distinct14distracting.

But, formal vs informal isn't actually the point of multiple representations in spec / implementation layers.

The real benefits of multiple representations A better analogy is autocatalytic sets or hypercycles,

Markdown-planning

Pretty formal, but also maybe more interesting to a coputer scientist or an economist than it is a software engineer.

You could say that formal vs informal is a distraction isn't really the point of multiple representations though. It's Think of it more in terms of

Some these with the idea of formal specifications / models. What is more interesting -suite or the project documentation / diagrams / bug-tracker / So, are we going to count test-suites,

Which Formalism?

So, which formalism? The best answer is all the formalisms, because roughly speaking, they do tend to compose. For example, sticking with the test-suite analogy.. the project suite passes if and only if the unit-tests / integration-test suites both passed. To that end, the fmtk module describes abstract interfaces that could possibly be used with other solvers/provers/games/simulations in the future.

No really, which formalism? In practice though, py-mcmas is focused on ISPL as the formalism of choice. To review, this is a high-level view of the neighborhood.

So in practice, you work on documentation, diagrams, code, or tests, then expect to be able to "synchronize" semantically equivalent work to the other domains you didn't touch.

ISPL has it's own limitations and issues which we'll get into momentarily, but agent-based modeling12 is a natural fit for a wide variety of problems, and scores pretty highly on the criteria discussed. Note that ISPL doesn't really need to replace a test-suite any more than the test-suite replaces the project code. The question is, does co-generation between all 3 representations (i.e. of spec / test-suite / code-base) work better than only using 2? 25

So far, this might seem like an argument for formal specifications in general. But the intriguing part about stealing ideas from ABM / MAS is that we can get a "first class" agent abstraction on both sides of the representational hierarchy.

To illustrate the idea, let's consider another version of the "impossible dream" diagram.

There are two really basic issues:

What is an agent, anyway? - Agent is tough to define, and means different things to different people. There's the multi-agent system11 sense and the agent based modeling12 sense, and these things are great for a variety of reasons, but that work also isn't related to what most people mean when they talk about agentic AI.

Specification languages aren't programming languages. - Proving properties about systems is really nice, but it usually doesn't step you closer to actually building that system. And if you do have a running system already.. modeling it is basically a separate activity that starts from scratch.

In both cases, you could say that there's a gap between theory and practice. One might ask.. does it have to be that way?

Formalisms could see more industry adoption if they were easier to use. Meanwhile agentic-AI could benefit from using more rigorous formalisms, both at the system layer in terms of design, and in implementation layers, at the level of agentic code and agentic frameworks. Building bridges that connect classic MAS research with agentic-AI is a big project. Bridging the gap between between programming languages and specification languages in useful ways is an ever bigger project! But isn't it about time to combine the strengths of these approaches?

One goal for py-mcmas is to function as kind of lab (and lab notebook!) for exploring related ideas.

The next section has more discussion on motivation and use-cases. If you dislike informal remarks on formal methods, skip it and feel free to jump into some demo code.

AI Road Map / Philosophy

So what's the appeal of connecting model-checking and agent-based-modeling with agentic-AI anyway? Let's start with a list of things that would be nice to have:

- Structured workflows & workflow composition,

- Agent coordination & intelligent routing between agents,

- Agents that can directly track, update, and inspect their own knowledge and beliefs

More controversially.. maybe a lot of this boils down to a small number of hard questions: How to do more explicit domain modeling? How exactly should we hybridize LLMs / symbolics? How can we get a better layer of persistent metadata, ideally one that can represent things like confidence, topology, and traces?

Discovering bugs automatically from specifications that are automatically generated from code is the dream of course, and it's starting to seem closer13!

There is also (gestures vaguely) all of the other stuff that we need to build around our amazing new stochastic oracles, so that we can stack them up into systems that are reliable and predictable. One recent write-up that's touching on lots of these ideas argues that AGI is now an Engineering Problem17, and that's well worth a read.

But you could argue that chain-of-code16 as an alternative to chain-of-thought, emerging superstructure in agent-frameworks18, increasing interest in world-models19 and really even MCP in general20, are all evidence of movement in this direction.

AI Features / WIP / Wish List

The rest of this section is about how py-mcmas (or something like it) might fit in.

Cite

@software{py-mcmas,

author = {mvr},

title = {PY-MCMAS: ISPL Generation Tools & Wrapper for MCMAS},

year = {2025},

version = {v1.0.0},

url = { https://mattvonrocketstein.github.io/py-mcmas },

note = {Accessed: 2025-07-30},

}

References

-

MCMAS: an open-source model checker for the verification of multi-agent systems, Lomuscio et al 2015 (pdf) ↩↩

-

Engine source mirror (github) ↩

-

Official user-manual (pdf) ↩

-

The container8 is managed by the

python-dockerSDK9, but you still need a docker daemon ready (or available at DOCKER_HOST). ↩ -

For a look at the container py-mcmas uses, see docker-mcmas.git. ↩